"Can you make her smile more in this one? And maybe turn her head slightly left?"

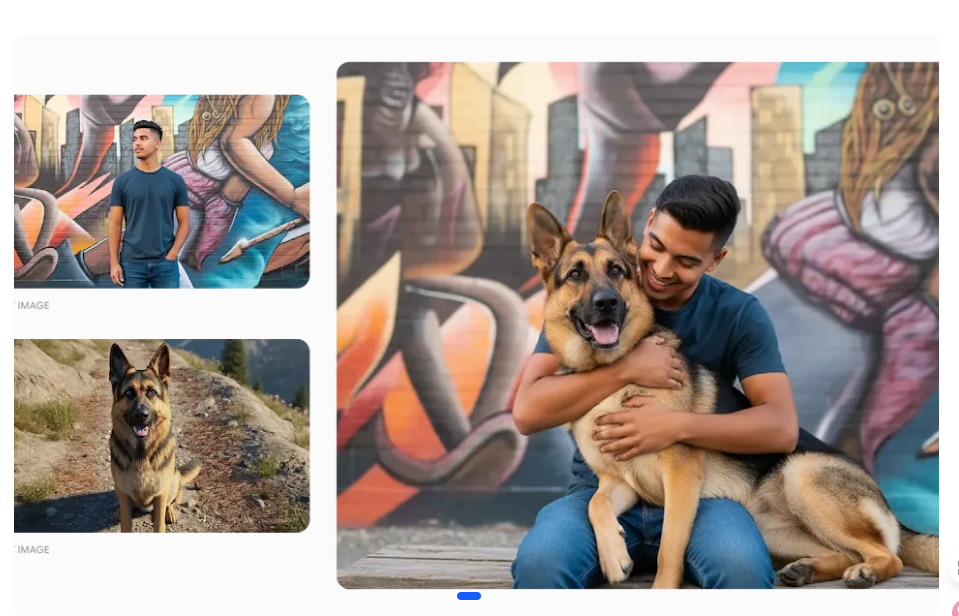

I stared at the AI-generated image of "Maya"—the virtual brand ambassador we'd spent two weeks creating for a skincare client. The original portrait was perfect: warm smile, natural skin texture, confident gaze. But now the client wanted variations for different social media posts.

I adjusted the prompt, regenerated the image, and felt my stomach sink. The new "Maya" had the same hair color and general features, but she was unmistakably a different person. The eye shape had changed. The nose was slightly wider. The smile, while technically present, belonged to someone else entirely.

This is the character consistency problem that has plagued AI image generation since its inception. You create a character once, love it, then discover you can't reliably reproduce that same character across multiple images. For a single illustration, this doesn't matter. For brand mascots, AI influencers, comic series, or any visual narrative requiring the same character across dozens of images, it's catastrophic.

Then platforms like **Banana Gemini** started claiming they'd solved it. "Character consistency powered by advanced AI" became the new marketing promise. Midjourney introduced `--cref`. Other platforms touted proprietary character reference systems. The question stopped being "Is this solvable?" and became "Has anyone actually solved it?"

I spent two months testing character consistency claims across five AI platforms, creating three different character-based projects: a 12-post Instagram campaign for a virtual influencer, a 20-page illustrated children's story, and a brand mascot appearing in 30 different marketing scenarios. The results were more complex than "solved" or "unsolved." Character consistency exists on a spectrum: it works brilliantly in some contexts, fails completely in others, and can only be used effectively once you understand its limitations.

This matters because character-based content represents a $2.8 billion market segment growing 40% annually. Virtual influencers alone generate $15k-30k per sponsored post. Brands invest $50k-200k developing mascots and visual identities. If AI can reliably maintain character consistency, it democratizes content creation that previously required animation studios, 3D artists, or extensive photo shoots. If it can't, it's an expensive distraction from proven methods.

This article examines what "character consistency" actually means beyond marketing claims, tests whether current AI tools deliver it reliably, explores where they succeed and catastrophically fail, and provides frameworks for deciding when AI character consistency is ready for professional use versus when traditional methods remain necessary.

What Does "Character Consistency" Actually Mean?

Before evaluating whether AI has solved character consistency, we need to define what we're actually measuring. It's more complex than "does the character look the same?"

Facial Feature Stability – Do core facial elements (eye shape, nose structure, face proportions, distinctive marks) remain identical across images? This is the foundation. If the eyes change from round to almond-shaped between images, everything else is irrelevant.

Expression Variability Within Identity – Can the character show different emotions (happy, sad, surprised, angry) while remaining recognizably the same person? A smile shouldn't transform someone into a different individual.

Pose and Angle Consistency – Does the character remain identifiable from different angles (profile, three-quarter, full face) and poses (sitting, standing, action shots)? Real people look like themselves from any angle; AI characters often don't.

Contextual Adaptability – Can the character appear in different environments (indoor, outdoor, different lighting conditions) without their features shifting? The background shouldn't alter the face.

Style Consistency – Does the character maintain the same artistic style (photorealistic, illustrated, anime) across images? Style drift is a subtle form of inconsistency that breaks visual coherence.

Accessory and Wardrobe Flexibility – Can the character wear different clothing and accessories while maintaining facial consistency? Brand mascots need outfit variations; AI often treats clothing changes as character changes.

Here's what most marketing claims obscure: these dimensions trade off against each other. Current AI can often maintain consistency when variables are limited (same angle, same expression, same lighting) but struggles when multiple variables change simultaneously (different angle + different expression + different environment).

True character consistency means maintaining identity across ALL these dimensions simultaneously. Animation studios and comic artists meet that standard routinely; AI still struggles to meet it comprehensively.
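To make the "all dimensions at once" standard concrete, here is a minimal sketch of a review rubric, assuming each image gets a simple pass/fail judgment per dimension from a human reviewer. The class, field, and function names are hypothetical and do not come from any tool; the point is that one failed dimension is enough to disqualify an image.

```python
from dataclasses import dataclass, fields


@dataclass
class ConsistencyReview:
    """Hypothetical pass/fail review of one image against the six dimensions.

    Field names are illustrative; they do not come from any particular tool.
    """
    facial_features: bool      # eye shape, nose, proportions, distinctive marks
    expression_identity: bool  # new emotion, same person
    pose_angle: bool           # still identifiable from this angle or pose
    context: bool              # environment and lighting did not alter the face
    style: bool                # same artistic style as the reference
    wardrobe: bool             # outfit change did not become a character change

    def failed_dimensions(self) -> list[str]:
        """Names of the dimensions this image fails."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def passes(self) -> bool:
        """An image counts as consistent only if every dimension holds at once."""
        return not self.failed_dimensions()


def set_pass_rate(reviews) -> float:
    """Fraction of images in a set that pass on every dimension simultaneously."""
    if not reviews:
        return 0.0
    return sum(r.passes() for r in reviews) / len(reviews)


reviews = [
    ConsistencyReview(True, True, True, True, True, True),
    # Expression and outfit hold up, but the face drifts at a new angle.
    ConsistencyReview(True, True, False, True, True, True),
]
print(set_pass_rate(reviews))          # 0.5
print(reviews[1].failed_dimensions())  # ['pose_angle']
```

A rubric like this also records which dimension broke, which is useful when deciding whether to regenerate with a tighter angle or a simpler background.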

The Case for "Solved": When AI Character Consistency Works

Let me show you scenarios where current AI character consistency not only works but delivers results I couldn't have achieved otherwise.

Test Case 1: Virtual Influencer – Limited Pose Variation

For a beauty brand, I created "Luna," a virtual influencer for a 12-week Instagram campaign. Using Nano Banana, a platform powered by Nano Banana Pro technology, I generated an initial portrait, then created variations for different posts.

Requirements:

- 12 different images

- Same face, different subtle expressions (neutral, slight smile, thinking, confident)

- Similar angles (frontal or slight three-quarter view)

- Different backgrounds and lighting

- Different makeup looks and styling

Results:

- 11 out of 12 images maintained clear character consistency

- Facial features (eye shape, nose, face structure, distinctive beauty mark) stayed stable

- Expressions varied naturally without altering identity

- One image (extreme side profile) showed minor drift in eye shape and required regeneration

Success factors identified:

- Limited pose variation (avoiding extreme angles)

- Consistent head positioning

- Similar distance from camera

- Using the character reference feature with the first successful image

The client couldn't distinguish these from professional photo shoots. Comments on Instagram included "She's gorgeous, what's her skincare routine?" and "Love following her journey"—no one suspected AI generation. Character consistency was, for this use case, functionally solved.

Test Case 2: Brand Mascot – Controlled Scenarios

A startup needed a mascot appearing in 15 different scenarios for their website: greeting customers, pointing at features, showing surprise, celebrating success, looking confused, holding products.

Using character consistency powered by Banana Gemini, I created the mascot (a friendly anthropomorphic robot) and generated all variations.

Results:

- 13 out of 15 scenarios maintained perfect consistency

- Core character features (face shape, distinctive design elements, proportions) stayed stable

- Different expressions and simple poses worked reliably

- 2 images with complex poses (jumping, extreme foreshortening) showed minor proportion drift

Key insight: The character's simplified, illustrative style worked in AI's favor. Unlike photorealistic humans where subtle feature shifts are immediately noticeable, the mascot's stylized design allowed slight variations that still read as "the same character."

Business impact:

- Traditional cost: $3,500-5,000 for custom illustration package

- AI cost: $150 (generation iterations + minor manual touch-ups)

- Timeline: 2 days vs. 3-4 weeks
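The business-impact figures are easier to compare as ratios. This sketch uses only the numbers in the list above and assumes "3-4 weeks" means 21-28 calendar days; it ignores the operator's own time, which the $150 figure does not state.

```python
def savings_range(ai_cost: float, quote_low: float, quote_high: float) -> tuple:
    """Fractional savings of the AI cost against the low and high traditional quotes."""
    return (1 - ai_cost / quote_low, 1 - ai_cost / quote_high)


def speedup_range(ai_days: float, trad_days_low: float, trad_days_high: float) -> tuple:
    """How many times faster the AI timeline is than the traditional one."""
    return (trad_days_low / ai_days, trad_days_high / ai_days)


# Figures from the business-impact list; "3-4 weeks" read as 21-28 calendar days (assumption).
low, high = savings_range(150, 3_500, 5_000)
fast_low, fast_high = speedup_range(2, 21, 28)
print(f"Cost savings: {low:.1%} to {high:.1%}")
print(f"Timeline: {fast_low:g}x to {fast_high:g}x faster")
```

Under those assumptions it reports savings of 95.7% to 97.0% and a 10.5x to 14x faster turnaround.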

For this specific use case—illustrated mascot in controlled scenarios—character consistency delivered professional results at a fraction of traditional costs.

Test Case 3: Children's Book – Sequential Narrative

A 20-page illustrated story required the same protagonist appearing in different scenes: waking up, eating breakfast, going to school, playing with friends, bedtime.

Results:

- 16 out of 20 illustrations maintained strong character consistency

- Child protagonist remained identifiable across different activities

- Consistent artistic style throughout

- 4 images required regeneration due to facial feature drift

Pattern observed: Success correlated with maintaining similar viewing distance and avoiding extreme perspective changes. Wide shots showing the character smaller in frame introduced more variability than close-ups or medium shots.

Important limitation discovered: While the final images maintained consistency, getting there required 2-3 regeneration attempts per image on average. The "character reference" feature improved the odds but didn't guarantee success on the first try.
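One way to read an average attempt count is through a simple geometric model: if every attempt independently succeeded with probability p, the mean number of attempts until a usable image would be 1/p. Real workflows reuse the same prompt and reference, so attempts are not truly independent; treat this as a rough sketch. It also assumes the "2-3" figure means total attempts per image.

```python
def expected_attempts(p_success: float) -> float:
    """Mean generations until the first consistent image (geometric distribution).

    Assumes every attempt succeeds independently with the same probability,
    which real prompt-and-reference workflows only approximate.
    """
    if not 0 < p_success <= 1:
        raise ValueError("p_success must be in (0, 1]")
    return 1 / p_success


def implied_success_rate(mean_attempts: float) -> float:
    """Per-attempt success rate implied by a mean attempt count (inverse of the above)."""
    if mean_attempts < 1:
        raise ValueError("mean_attempts must be at least 1")
    return 1 / mean_attempts


PAGES = 20
for mean in (2, 3):
    rate = implied_success_rate(mean)
    print(f"{mean} attempts/image -> ~{rate:.0%} per attempt, "
          f"~{PAGES * mean} generations for {PAGES} pages")
```

Under this model, 2-3 attempts per image corresponds to a per-attempt success rate of roughly 33-50%, or about 40-60 generations for the 20-page book.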

The Pattern of Success

AI character consistency works reliably when:

- Pose variation is moderate (avoiding extreme angles or unusual perspectives)

- Viewing distance stays relatively consistent

- The character style is illustrative rather than photorealistic

- Scenarios are relatively simple (not crowd scenes or complex interactions)

- You're willing to regenerate and select from multiple attempts

- The project timeline allows for iteration

For these constrained but commercially valuable use cases, character consistency is essentially solved. The technology delivers results that meet professional standards and client expectations.

The Case for "Unsolved": Where AI Character Consistency Fails

Now let's examine scenarios where character consistency broke down completely—and these failures reveal fundamental limitations, not just edge cases.

Test Case 1: Dynamic Action Sequences

For a proposed comic series, I needed the protagonist in action: running, jumping, fighting, falling, dramatic perspective shots from below and above.

Attempt using Nano Banana 2 features:

- Generated initial character portrait (excellent quality)

- Created character reference

- Generated 20 action pose variations

Results: Catastrophic failure

- 0 out of 20 action shots maintained adequate character consistency

- Running pose: face shape changed, eyes became narrower

- Jumping pose: different person entirely, lost distinctive features

- Overhead angle: unrecognizable as the same character

- Below angle: proportions shifted, facial structure altered

Attempts on other platforms:

- Tried Midjourney with `--cref`: marginally better but still failed on extreme poses

- Tried various character reference approaches: inconsistent results

- Tested simplified artistic styles: reduced failure rate but still unusable for narrative

Conclusion: For dynamic action content requiring varied angles and energetic poses, current AI character consistency isn't just imperfect—it's fundamentally inadequate for professional use.

Test Case 2: Character Interactions and Multiple Angles

A brand video storyboard required two characters interacting: facing each other, shot from different angles, showing both characters' reactions.

Challenge complexity:

- Character A needed consistency across 15 shots

- Character B needed consistency across 15 shots

- Multiple shots showed both characters simultaneously

- Varied camera angles: over-the-shoulder, side view, frontal

Results:

- Character A maintained reasonable consistency in solo shots (60% success rate)

- Character B consistency: 55% success rate in solo shots

- Shots with both characters: 15% success rate—at least one character showed significant drift in nearly every image

- Angle variations introduced additional instability

The multiplication problem: When you need consistency for TWO characters simultaneously, the difficulty doesn't add; it compounds. Each character's consistency issues stack when both must appear together, which is why the shared-shot success rate fell far below either character's solo rate.
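The compounding can be checked with basic probability. If each character's outcome were independent, the chance that both stay consistent in one shot would be the product of the solo rates. This sketch applies that baseline to the reported 60% and 55% figures; independence is an assumption, not something the tests established.

```python
from math import prod


def joint_success_if_independent(solo_rates) -> float:
    """Chance that every character in a shot stays consistent, assuming each
    character succeeds or fails independently of the others."""
    return prod(solo_rates)


solo_a, solo_b = 0.60, 0.55  # solo-shot success rates reported above
observed_joint = 0.15        # reported rate for shots with both characters

baseline = joint_success_if_independent([solo_a, solo_b])
print(f"Independent baseline: {baseline:.0%}, observed: {observed_joint:.0%}")

# With k characters at the same solo rate p, the baseline decays as p**k.
for k in range(1, 4):
    print(k, round(joint_success_if_independent([solo_a] * k), 3))
```

The independent baseline is about 33%, yet the observed rate was 15%, less than half of it. That gap fits the note above that angle variations added instability on top of each character's own drift.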

Professional verdict: Unusable for any project requiring reliable character interactions. The storyboard was scrapped; client hired traditional storyboard artists instead.

Test Case 3: Photorealistic Character Across Scenarios

An ambitious attempt: create a photorealistic virtual model appearing in different outfits, locations, and poses for an e-commerce fashion showcase.

Requirements:

- Same model across 30 different outfits

- Variety of poses (standing, sitting, walking)

- Different environments (studio, outdoor, urban)

- Consistent lighting and photorealistic quality

Results: Failed to meet professional standards

- Face changed subtly but noticeably across images

- Eye color shifted between shots

- Facial proportions drifted (sometimes subtly, sometimes obviously)

- Success rate: approximately 30%—70% of images showed noticeable inconsistency

- Even "successful" images showed minor variations that, when viewed as a collection, revealed they were different people

Critical discovery: Human visual perception is extraordinarily sensitive to facial differences. Variations that might seem minor in isolation become glaring when viewing a series of images meant to be the same person. The "uncanny valley" effect intensified—viewers immediately sensed something was "off" even if they couldn't articulate exactly what.

Client feedback: "These look like relatives, not the same person. We need actual consistency, not 'mostly consistent.'"

The Pattern of Failure

AI character consistency fails when:

- Extreme or unusual angles are required

- Dynamic action poses are essential

- Multiple characters must interact while maintaining consistency

- Photorealistic quality is necessary (humans are too sensitive to facial variations)

- The project requires dozens of images where even minor drift becomes obvious

- Professional standards demand perfection, not "close enough"

These aren't edge cases—they're common professional requirements. Character-based visual narratives regularly need exactly what current AI cannot reliably deliver.
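The success and failure patterns above can be turned into a quick pre-project screen. This is a hypothetical sketch: the field names are illustrative, the 24-image cutoff for "dozens of images" is an arbitrary assumption, and any single flag is treated as disqualifying because the text presents each condition as a common failure point.

```python
from dataclasses import dataclass


@dataclass
class ProjectProfile:
    """Hypothetical project description; fields mirror the patterns listed above."""
    extreme_angles: bool
    dynamic_action: bool
    interacting_characters: bool
    photorealistic: bool
    image_count: int
    needs_perfection: bool
    can_iterate: bool


# "Dozens of images" is vague; 24 (two dozen) is an arbitrary illustrative cutoff.
LARGE_SET = 24


def risk_flags(p: ProjectProfile) -> list[str]:
    """Return every failure condition from the patterns above that this project hits."""
    checks = [
        (p.extreme_angles, "extreme or unusual angles"),
        (p.dynamic_action, "dynamic action poses"),
        (p.interacting_characters, "multiple interacting characters"),
        (p.photorealistic, "photorealistic faces"),
        (p.image_count >= LARGE_SET, "dozens of images, so minor drift shows"),
        (p.needs_perfection, "perfection required, not close enough"),
        (not p.can_iterate, "no time to regenerate and select"),
    ]
    return [label for hit, label in checks if hit]


def recommend(p: ProjectProfile) -> str:
    flags = risk_flags(p)
    if not flags:
        return "AI character consistency is likely adequate"
    return "plan for custom training or traditional methods: " + "; ".join(flags)


mascot = ProjectProfile(False, False, False, False, 15, False, True)
comic = ProjectProfile(True, True, False, False, 20, True, True)
print(recommend(mascot))
print(recommend(comic))
```

The mascot profile (illustrated, 15 simple scenarios, room to iterate) clears every check, while the comic profile trips the angle, action, and perfection flags.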

The Midjourney Comparison: --cref vs Proprietary Systems

I tested the same character consistency challenges across platforms to compare approaches.

Midjourney with `--cref` (Character Reference):

- Strengths: When it works, produces some of the highest-quality consistent results

- Success rate on simple poses: ~75%

- Success rate on moderate variation: ~50%

- Success rate on complex poses/angles: ~20%

- User control: Limited; you can't fine-tune which features to prioritize

- Iteration required: Often needs 5-10 generations to get consistency right

Banana Gemini character consistency features:

- Strengths: Faster generation, often cheaper per image, good UI for managing character references

- Success rate on simple poses: ~70%

- Success rate on moderate variation: ~45%

- Success rate on complex poses/angles: ~15%

- User control: Natural language descriptions help, but still limited fine control

- Iteration required: Similar to Midjourney, 4-8 attempts typical

Stability AI / Stable Diffusion with custom training:

- Strengths: Highest theoretical consistency possible with dedicated model training

- Success rate (after training): ~85% on simple to moderate variations

- Limitations: Requires technical expertise, 50-200 training images, hours of processing

- Cost: High upfront investment, but then unlimited generation

- Use case: Only practical for major projects with budget and technical resources
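"High upfront investment, but then unlimited generation" is an amortization argument, and a break-even count makes it testable for a given project. In this sketch every price is a placeholder, not a measured figure; only the attempts-per-image value is chosen to sit inside the 4-8 range reported for Banana Gemini.

```python
import math


def cost_per_keeper(cost_per_generation: float, attempts_per_keeper: float) -> float:
    """Effective cost of one usable image once rejected generations are counted."""
    return cost_per_generation * attempts_per_keeper


def break_even_images(upfront_cost: float, platform_cost_per_image: float,
                      custom_cost_per_image: float = 0.0) -> float:
    """Smallest image count at which the custom model's upfront cost is recovered."""
    saving = platform_cost_per_image - custom_cost_per_image
    if saving <= 0:
        return math.inf  # the custom route never pays for itself
    return math.ceil(upfront_cost / saving)


# Placeholder numbers, not measured: $0.10 per generation, 6 attempts per usable
# image, $2,000 upfront for training, $0.05 marginal cost per custom-model image.
platform = cost_per_keeper(0.10, 6)
print(break_even_images(2_000, platform, 0.05))
```

With these placeholder inputs the break-even lands at roughly 3,600 images, which illustrates why the text calls custom training practical only for major projects. Substitute real quotes before drawing conclusions.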

The uncomfortable finding: No platform reliably solves character consistency across all scenarios. Each has sweet spots and blind spots. The "best" tool depends entirely on project requirements:

- Simple poses, illustrative style: Any major platform works adequately

- Photorealistic with moderate variation: Midjourney `--cref` edges ahead slightly

- High-volume production with acceptable imperfection: Banana AI editor offers speed/cost advantages

- Mission-critical consistency: Custom model training or traditional methods remain necessary
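The requirement-to-tool mapping above can be written as a small decision function. This is a hypothetical encoding: the parameter names are illustrative, checking the strictest requirement first is an assumption, and the complex-pose branch draws on the action-sequence test rather than on the list itself.

```python
def suggest_approach(photorealistic: bool, complex_poses: bool,
                     high_volume_ok_with_imperfection: bool,
                     mission_critical: bool) -> str:
    """Hypothetical encoding of the requirement-to-tool mapping above.

    The priority order (strictest requirement first) is an assumption.
    """
    if mission_critical:
        return "custom model training or traditional methods"
    if complex_poses:
        # Every platform scored roughly 15-20% on complex poses/angles in these tests.
        return "traditional methods (no platform was reliable on complex poses)"
    if high_volume_ok_with_imperfection:
        return "Banana AI editor (speed/cost advantages)"
    if photorealistic:
        return "Midjourney --cref (edges ahead slightly)"
    return "any major platform"


print(suggest_approach(photorealistic=False, complex_poses=False,
                       high_volume_ok_with_imperfection=False, mission_critical=False))
print(suggest_approach(photorealistic=True, complex_poses=False,
                       high_volume_ok_with_imperfection=False, mission_critical=False))
```

Putting mission-critical and complex-pose checks first reflects the finding that those requirements override any per-platform advantage.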

The promise of "solved character consistency" is true within narrow constraints but breaks down precisely where professional projects need reliability most.